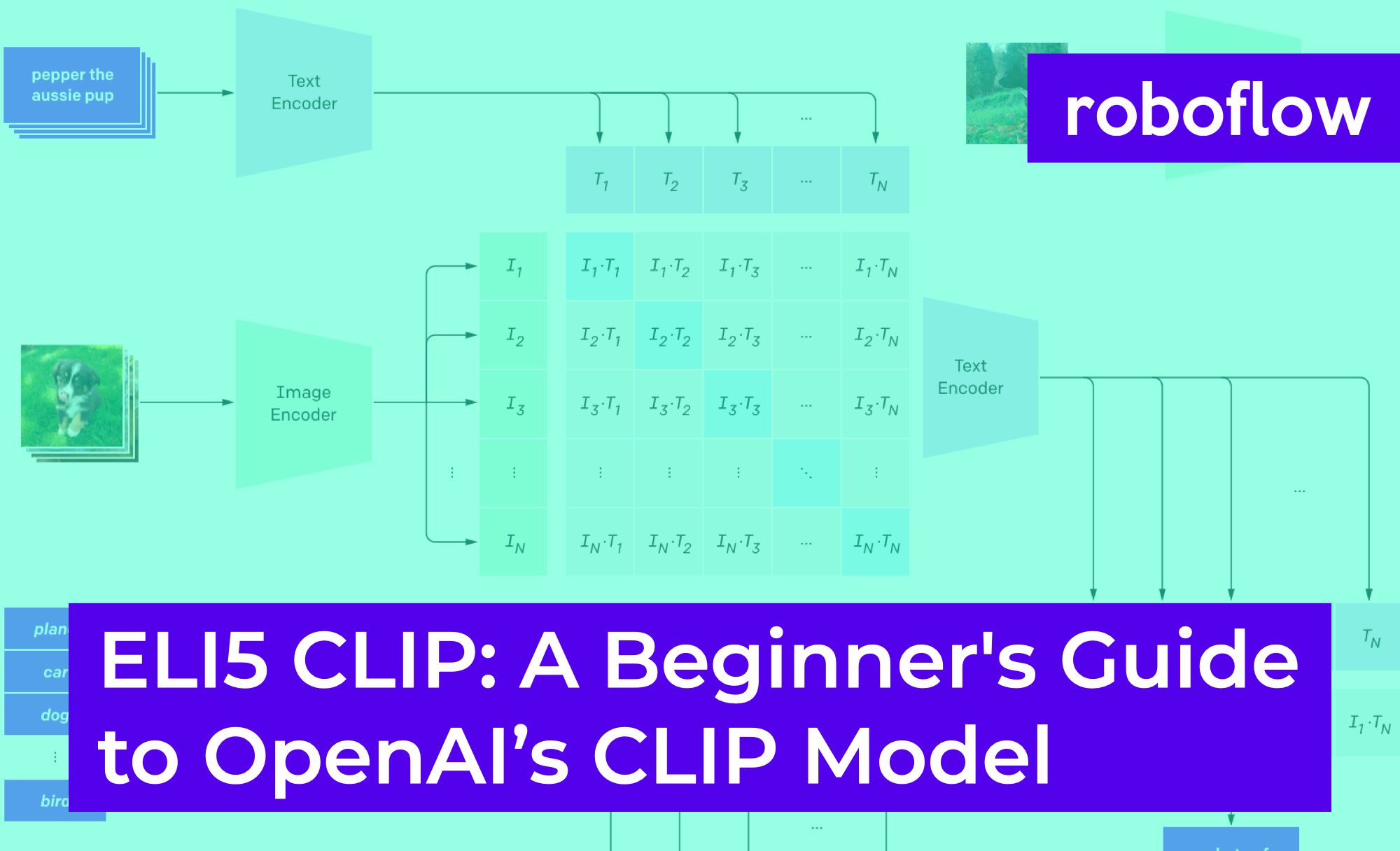

OpenAI's CLIP Explained and Implementation | Contrastive Learning | Self-Supervised Learning - YouTube

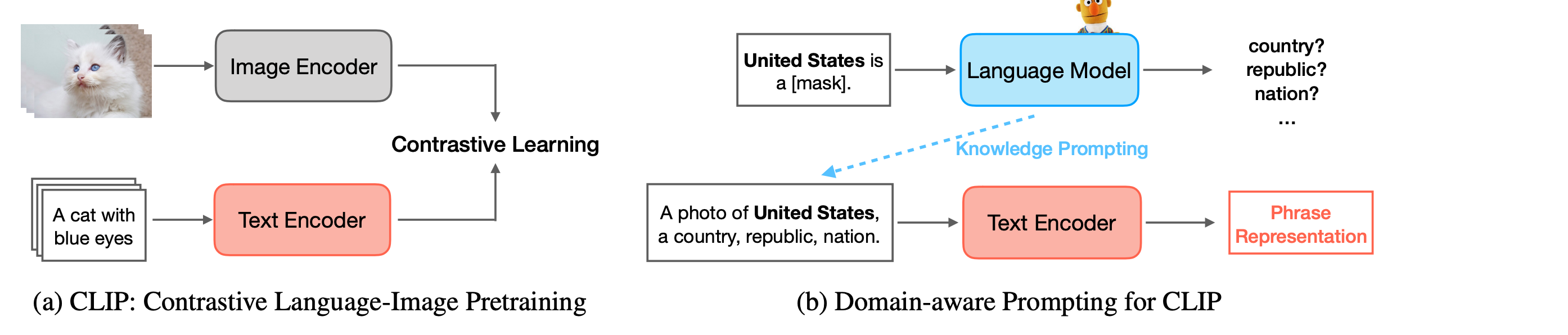

How do I decide on a text template for CoOp:CLIP? | AI-SCHOLAR

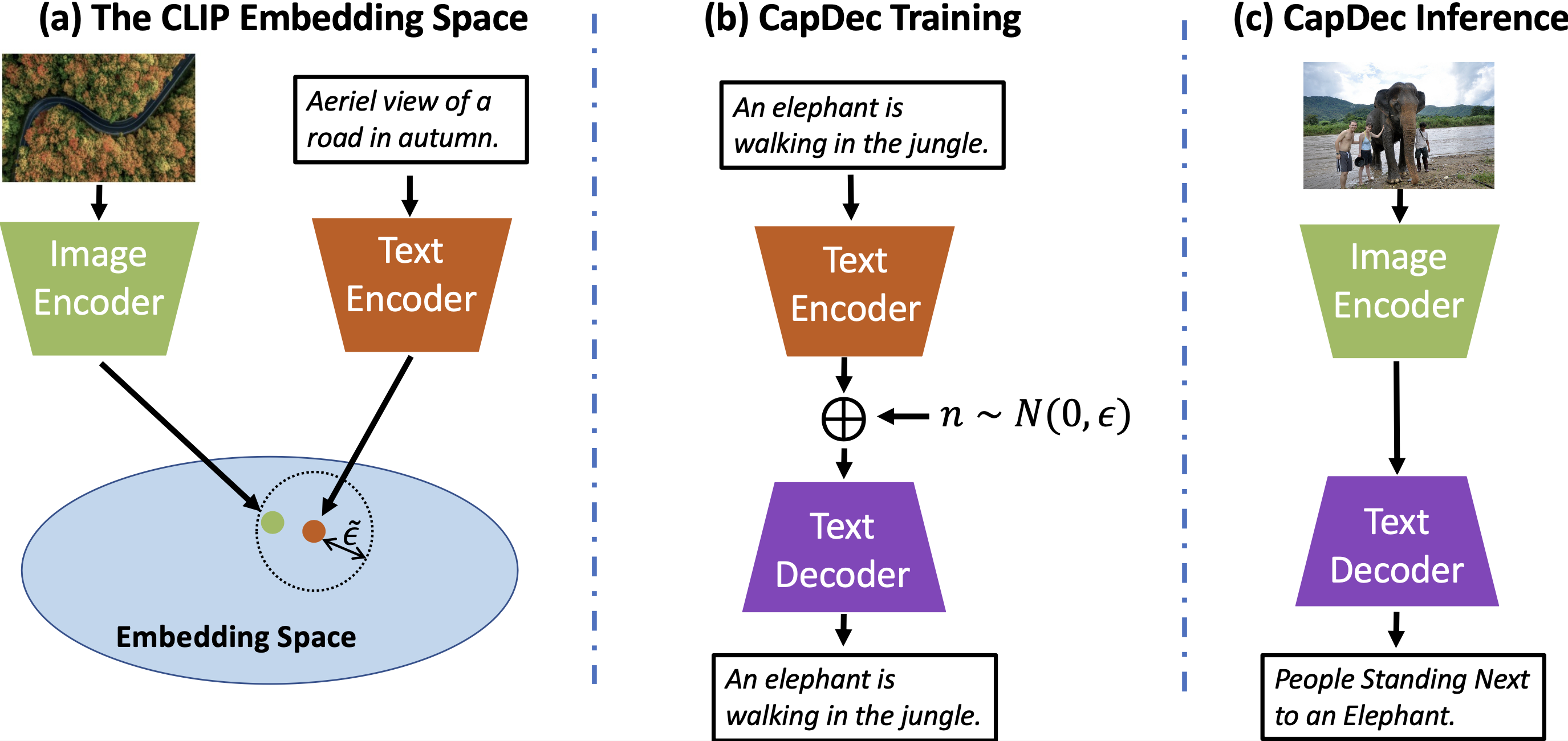

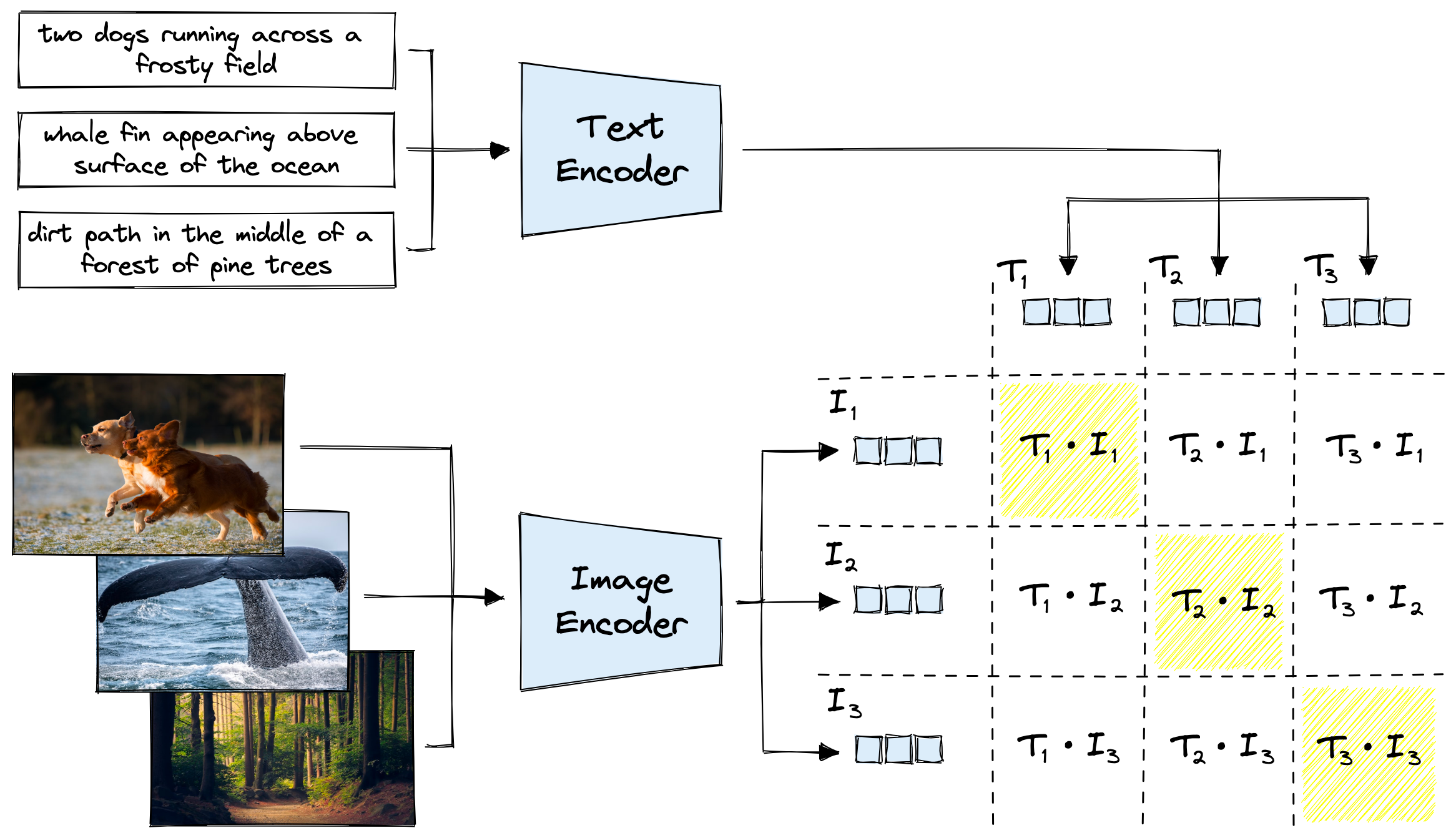

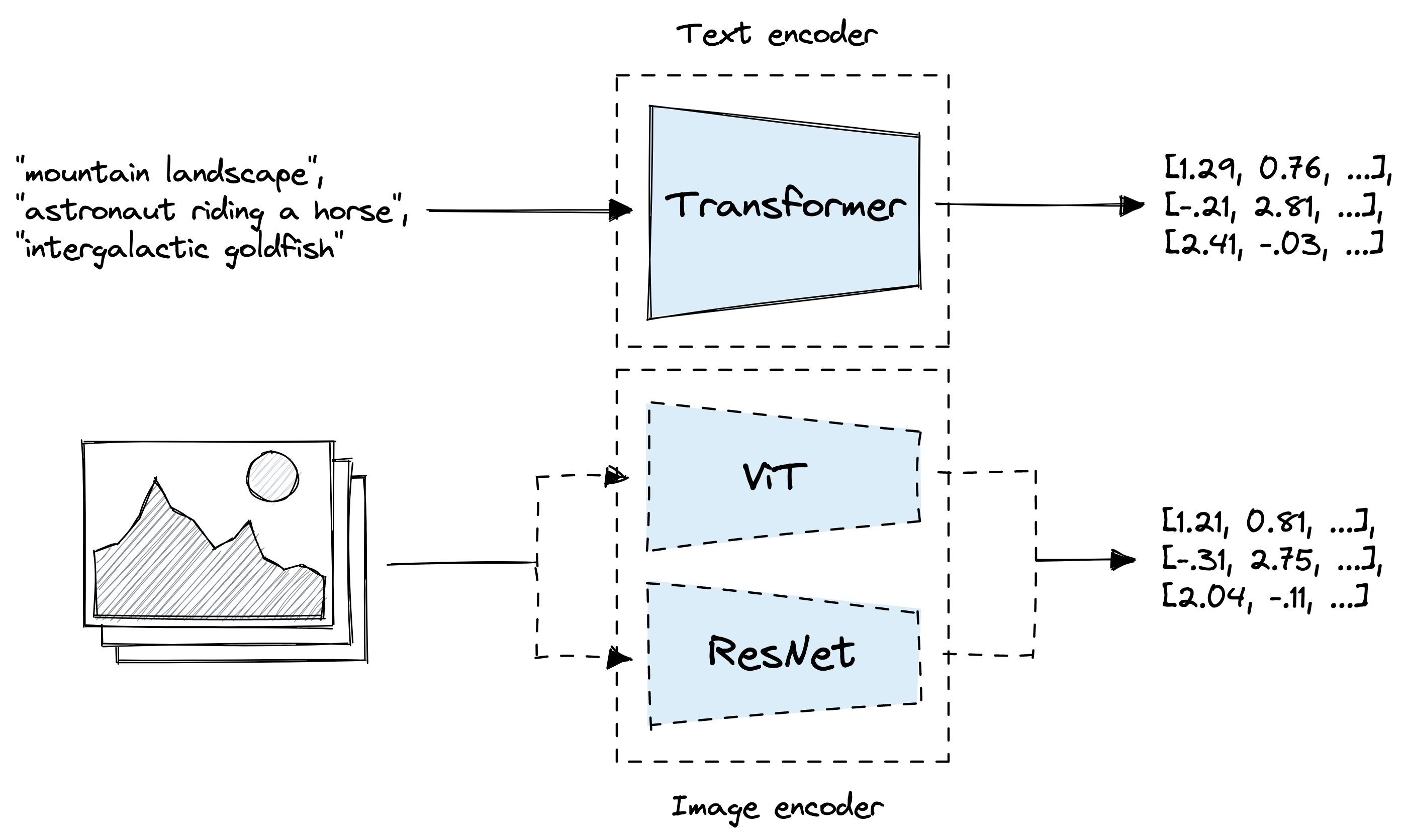

Example showing how the CLIP text encoder and image encoders are used...

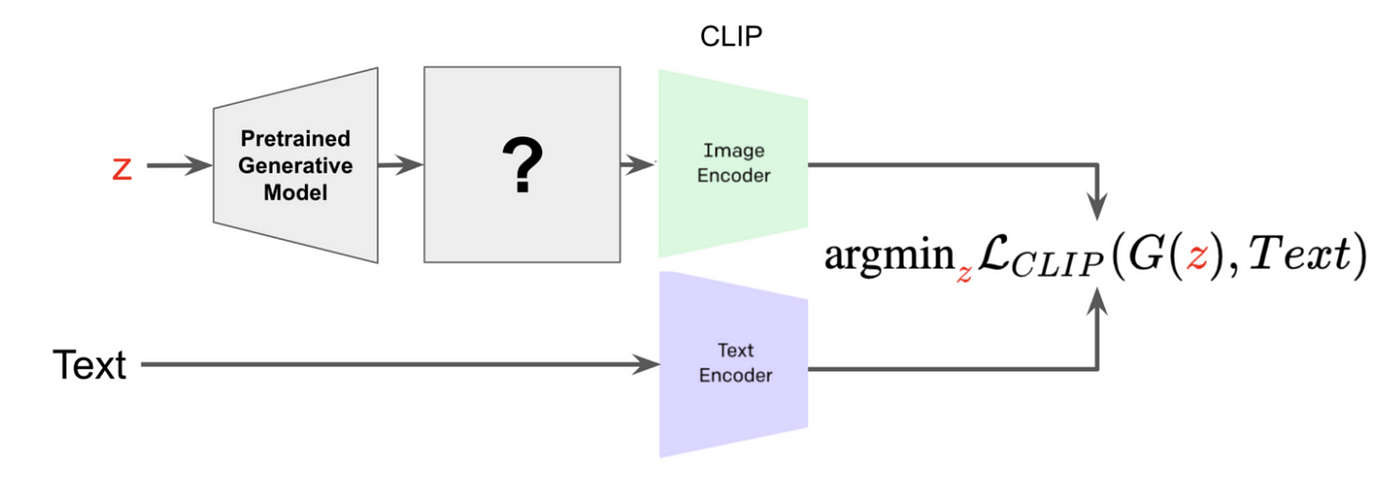

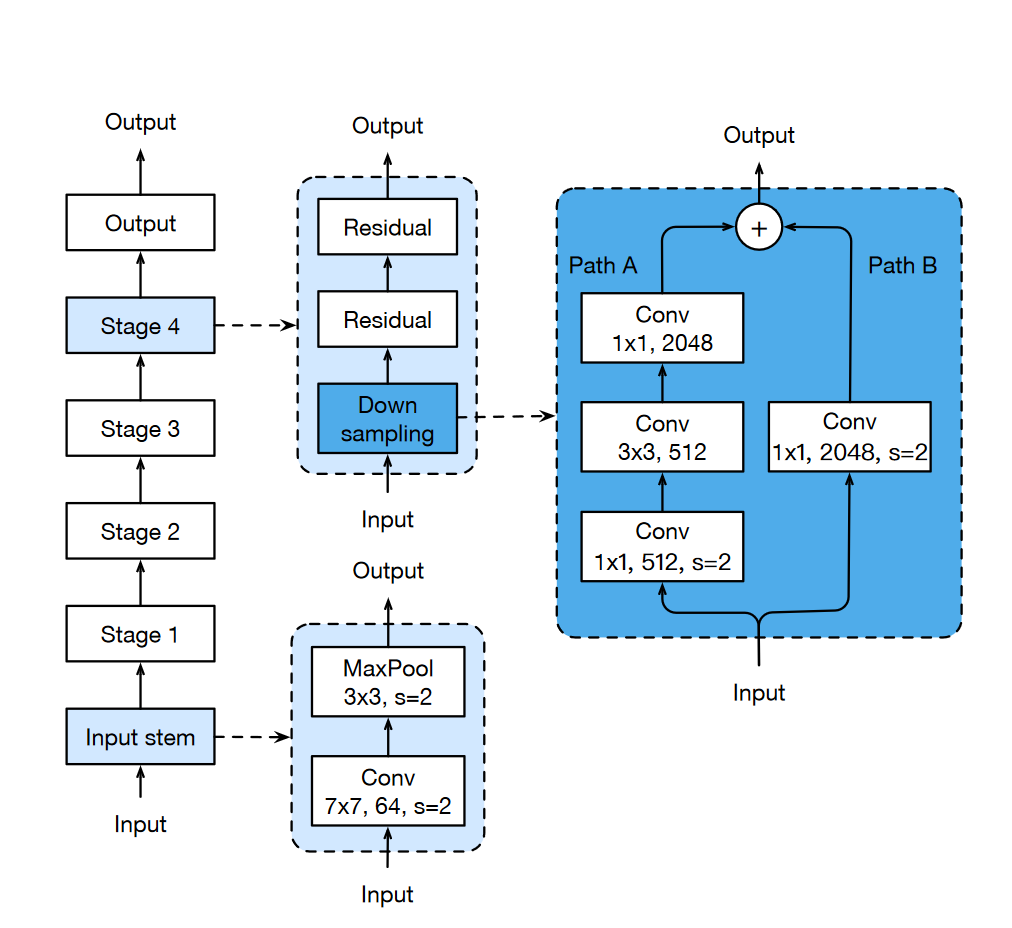

MaMMUT: A simple vision-encoder text-decoder architecture for multimodal tasks – Google Research Blog

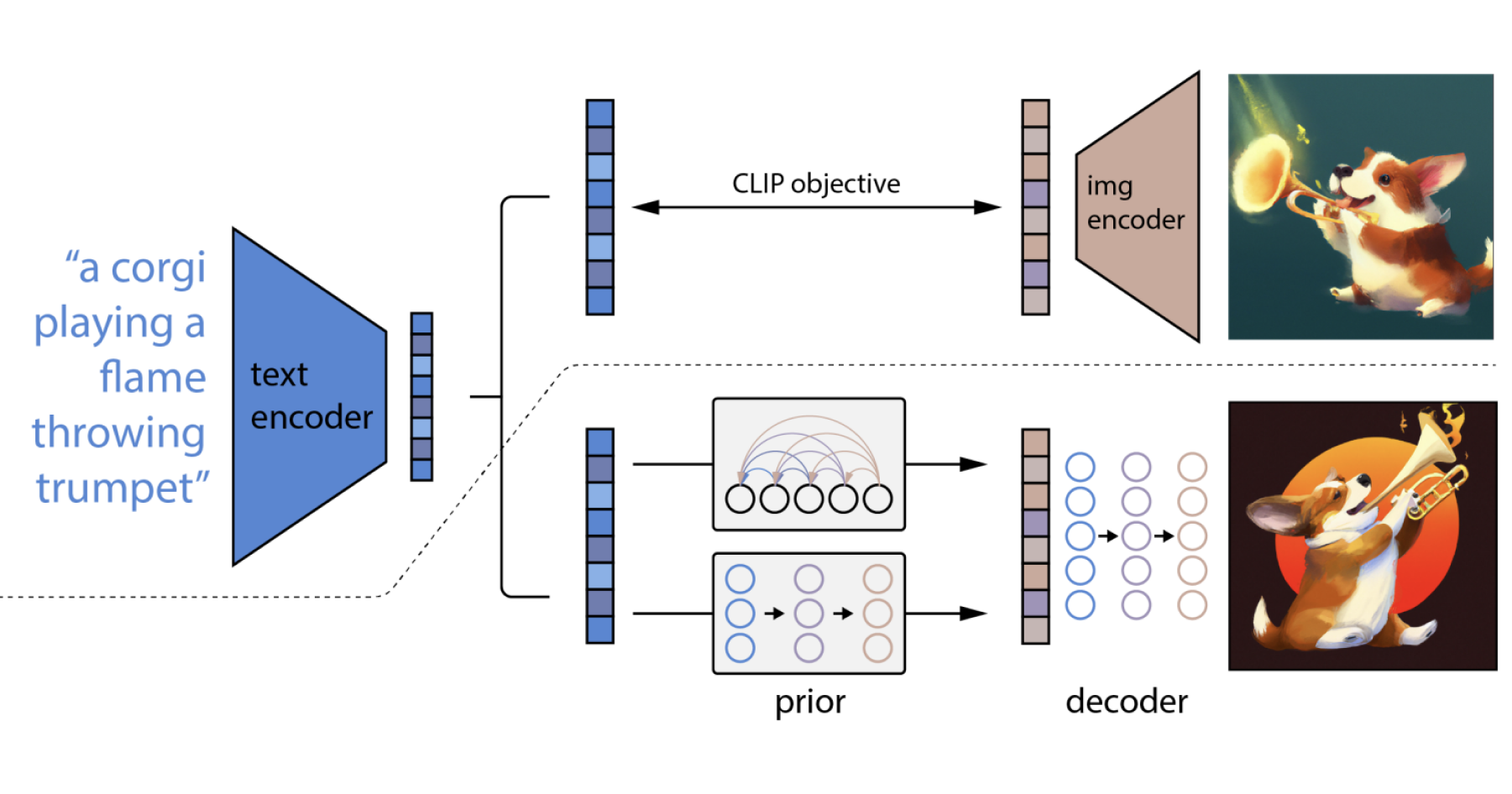

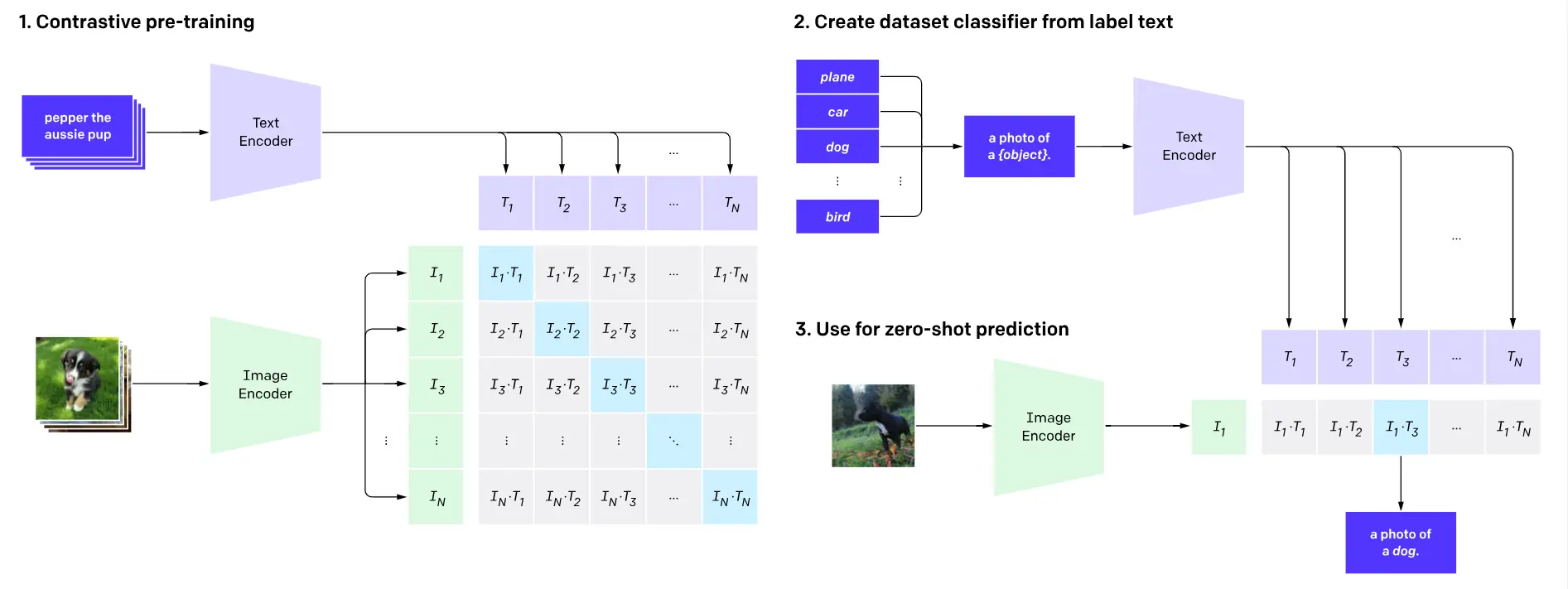

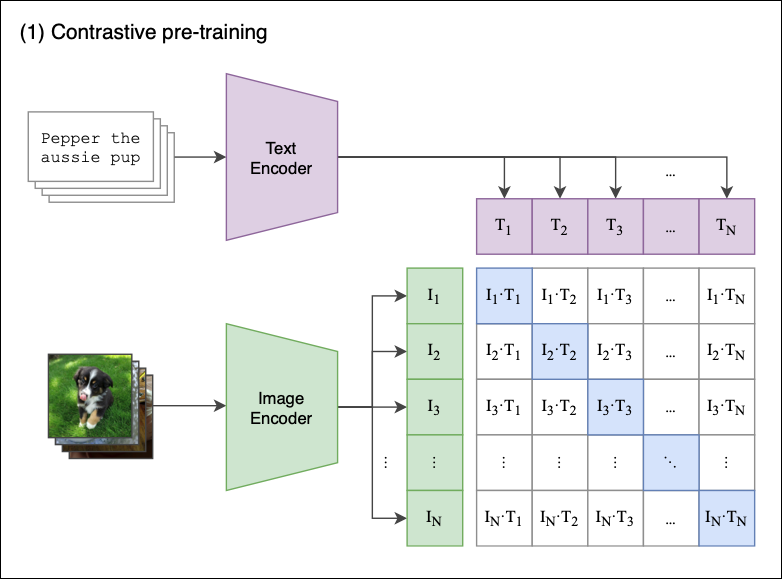

Process diagram of the CLIP model for our task. This figure is created...

Romain Beaumont on X: "@AccountForAI and I trained a better multilingual encoder aligned with openai clip vit-l/14 image encoder. https://t.co/xTgpUUWG9Z 1/6 https://t.co/ag1SfCeJJj"

CLIP: The Most Influential AI Model From OpenAI — And How To Use It | by Nikos Kafritsas | Towards Data Science

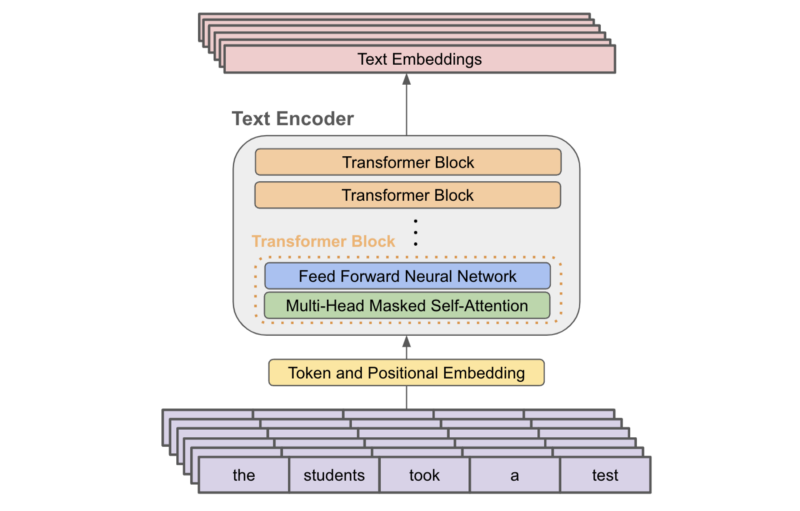

Overview of VT-CLIP where text encoder and visual encoder refers to the...